SOM AI 2.0: Architecting a Research Hub for 350,000+ Students

How I transformed a viral TikTok chatbot into a production-grade academic platform using NestJS, Redis, and agentic AI workflows.

#1. Origin: From a Dorm Room to 350,000 Students

SOM AI began as a simple idea during Indonesia’s annual skripsi (thesis) season. In early 2023, the founder, Nabil Raihan, noticed a recurring problem: students needed a research partner they could rely on anytime, not just during office hours.

The name itself was accidental. Searching for an Indonesian word ending with “AI” led to “Somay”, a popular fish dumpling. What started as a lightweight chatbot hosted on a personal domain (nabilrei.my.is/somai) unexpectedly went viral on TikTok and Instagram Reels.

As usage surged beyond what a script-based setup could handle, I joined as a Fullstack Engineer to lead the SOM AI 2.0 revamp, turning a viral experiment into a scalable academic platform.

#2. The SOM AI 2.0 Goal: From Chatbot to Research Platform

The objective of SOM AI 2.0 was clear: to move beyond casual chat and build a professional research ecosystem.

This meant:

- Supporting complex academic workflows

- Handling sustained traffic at scale

- Designing a system students could depend on throughout their thesis journey

To achieve this, we migrated to a structured Next.js + NestJS architecture with a focus on performance, modularity, and long-term maintainability.

#3. Backend Foundations: Designing for Scale

With 350,000+ registered users, backend performance became critical. Two bottlenecks stood out early: message retrieval and repeated metadata access.

#3.1 Cursor-Based Pagination for Chat History

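As chat histories grew into the thousands of messages, traditional offset pagination caused noticeable delays, because the database has to read and discard every skipped row before it can return a page.

- Approach: Switched to cursor-based pagination using message IDs as pointers.

- Outcome: Query cost that stays roughly flat however far back a user scrolls (given an index on the message ID), and smooth scrolling even for long-term users.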
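To make the cursor idea concrete, here is a minimal sketch rather than the production code. It runs against an in-memory array so it is self-contained; the `Message` shape, the `getMessagesPage` name, and the "fetch one extra row" trick are assumptions for illustration, not details taken from SOM AI's codebase.

```typescript
// Hypothetical message shape; field names are assumptions, not SOM AI's schema.
interface Message {
  id: number; // monotonically increasing, so it can double as a stable cursor
  chatId: string;
  text: string;
}

interface Page {
  items: Message[];
  nextCursor: number | null; // pass this as `cursor` to get the next (older) page
}

// In-memory stand-in for the messages table. The SQL equivalent is roughly:
//   SELECT * FROM messages
//   WHERE chat_id = $1 AND id < $2
//   ORDER BY id DESC LIMIT $3
function getMessagesPage(
  messages: Message[],
  chatId: string,
  limit: number,
  cursor?: number,
): Page {
  const matching = messages
    .filter((m) => m.chatId === chatId && (cursor === undefined || m.id < cursor))
    .sort((a, b) => b.id - a.id); // newest first

  // Take one extra row to learn whether another page exists.
  const rows = matching.slice(0, limit + 1);
  const hasMore = rows.length > limit;
  const items = hasMore ? rows.slice(0, limit) : rows;

  return {
    items,
    nextCursor: hasMore ? items[items.length - 1].id : null,
  };
}

// Usage: walk a five-message chat two messages at a time.
const demoMessages: Message[] = [1, 2, 3, 4, 5].map((id) => ({
  id,
  chatId: "chat-1",
  text: `message ${id}`,
}));

const firstPage = getMessagesPage(demoMessages, "chat-1", 2);
const secondPage = getMessagesPage(
  demoMessages,
  "chat-1",
  2,
  firstPage.nextCursor ?? undefined,
);
```

Unlike `OFFSET`, the `id < cursor` condition lets the database jump straight to the right position in the index, and new messages arriving mid-scroll do not shift the pages that were already loaded.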

#3.2 Redis as a Performance Layer

To reduce unnecessary database load, I introduced Redis for caching session data and frequently accessed user metadata.

- Impact:

  - PostgreSQL load significantly reduced

  - API response times (non-LLM requests) improved by ~60%
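The caching described here follows what is commonly called the cache-aside pattern. The sketch below illustrates that pattern only. It uses an in-memory class as a stand-in so it runs without a Redis server, and the `KeyValueCache` interface, the `user:meta:` key prefix, the 300-second TTL, and the `UserMetadata` shape are all assumptions, not the platform's actual configuration.

```typescript
// Minimal async key-value interface. A real Redis client would sit behind
// this; the in-memory class below is only a stand-in for the sketch.
interface KeyValueCache {
  get(key: string): Promise<string | null>;
  set(key: string, value: string, ttlSeconds: number): Promise<void>;
}

class InMemoryCache implements KeyValueCache {
  private store = new Map<string, { value: string; expiresAt: number }>();

  async get(key: string): Promise<string | null> {
    const entry = this.store.get(key);
    if (!entry) return null;
    if (Date.now() >= entry.expiresAt) {
      this.store.delete(key); // lazily evict expired entries
      return null;
    }
    return entry.value;
  }

  async set(key: string, value: string, ttlSeconds: number): Promise<void> {
    this.store.set(key, { value, expiresAt: Date.now() + ttlSeconds * 1000 });
  }
}

// Hypothetical metadata shape (plan names come from the article's tiers).
interface UserMetadata {
  userId: string;
  plan: "free" | "basic" | "extra";
}

// Cache-aside read: try the cache first, fall back to the database loader
// on a miss, then populate the cache for subsequent requests.
async function getUserMetadata(
  cache: KeyValueCache,
  loadFromDb: (userId: string) => Promise<UserMetadata>,
  userId: string,
  ttlSeconds = 300, // assumed TTL
): Promise<UserMetadata> {
  const key = `user:meta:${userId}`;
  const cached = await cache.get(key);
  if (cached !== null) return JSON.parse(cached) as UserMetadata;

  const fresh = await loadFromDb(userId);
  await cache.set(key, JSON.stringify(fresh), ttlSeconds);
  return fresh;
}
```

The TTL bounds how stale a cached record can get. Anything that changes a user's metadata (a plan upgrade, for example) would also need to delete or overwrite the key, which this sketch leaves out.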

#4. Humanizing the AI: The “Bahasa Gaul” Strategy

Academic writing is stressful. To lower that barrier, we intentionally designed the AI’s tone to use Bahasa Gaul, informal Indonesian slang like lo and gue.

- Purpose: Reduce intimidation and cognitive load

- Effect: Students don’t just ask for citations; they curhat (vent) about their blockers

This subtle personality choice helped position SOM AI as a supportive peer, not a rigid academic tool.

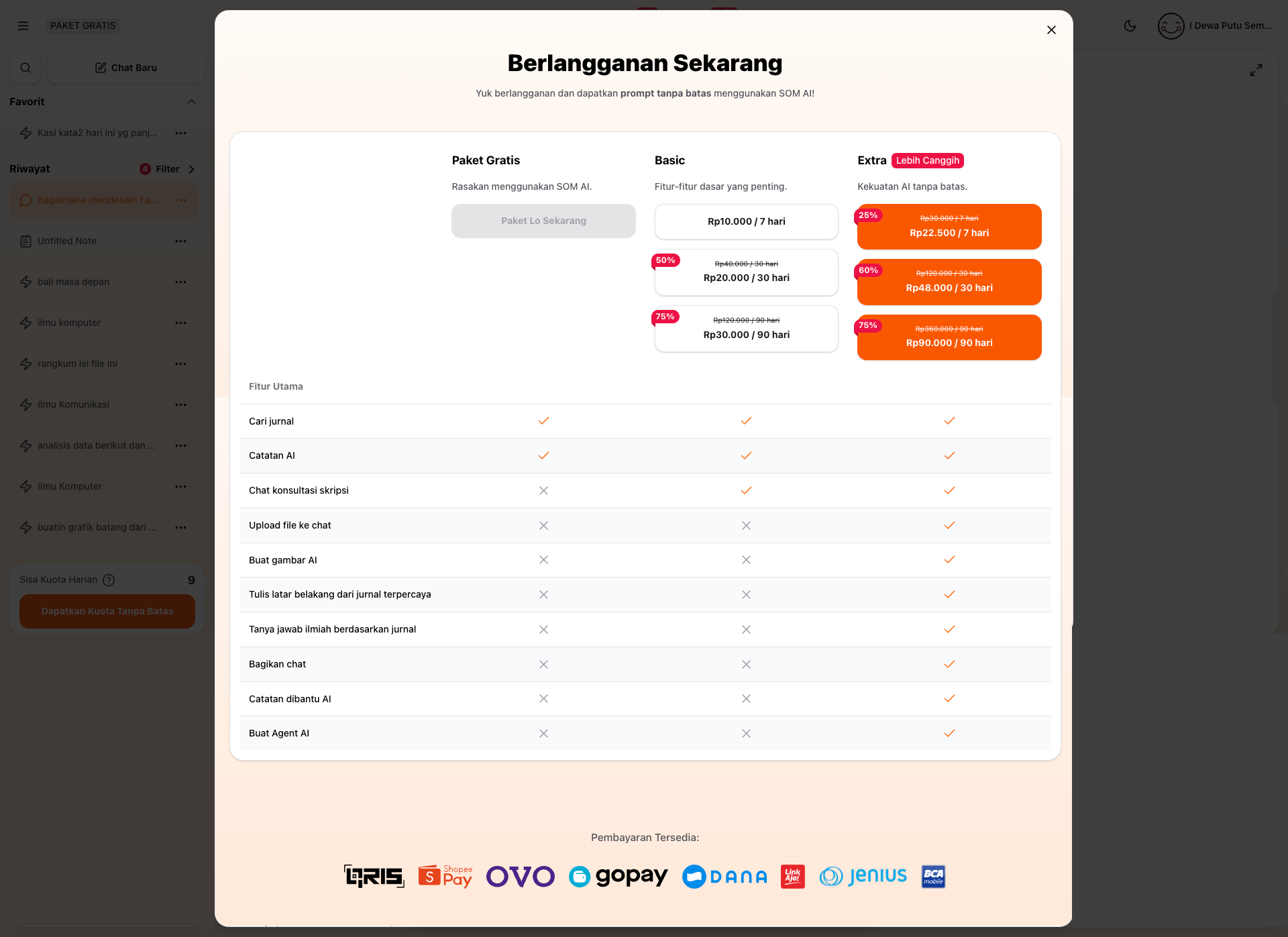

#5. Monetization & Product Scaling in SOM AI 2.0: The Extra Tier

As usage matured, the original Free and Basic plans were no longer enough. In SOM AI 2.0, we redesigned the subscription system and introduced a new Extra Tier for serious research use.

#5.1 What the Extra Tier Unlocks

The Extra Tier focuses on depth, customization, and academic rigor:

- Advanced Research Tools: Scientific Q&A and background writing grounded in trusted sources

- AI Workspace: AI-assisted notes (Catatan AI), journal search, and shareable scientific chats

- Media & Consultation: Image generation and dedicated thesis consultation modules

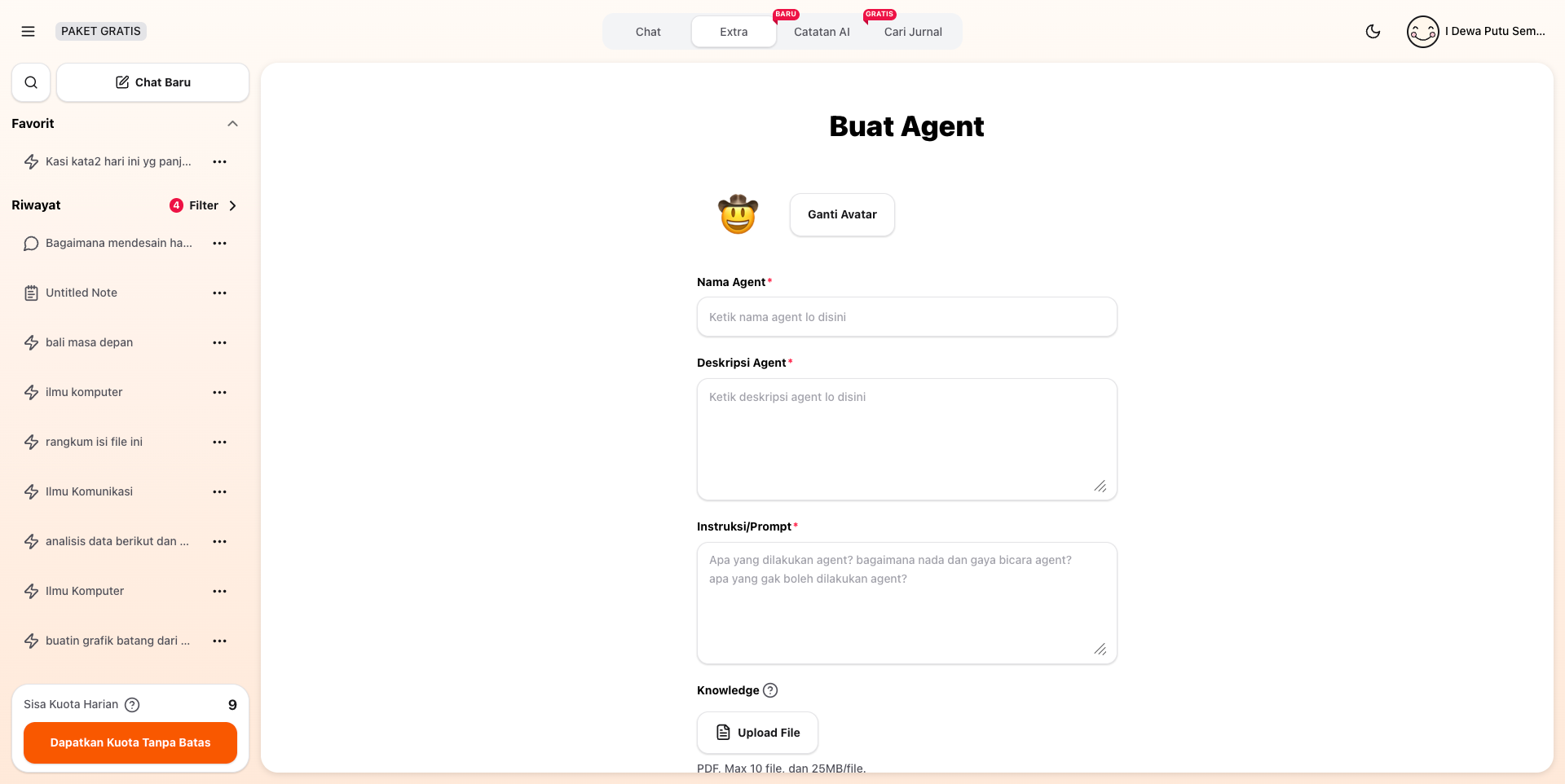

#6. Custom AI Agents: Personalized Research Assistants

One of the most powerful SOM AI 2.0 features is Build Your Own Agent, available in the Extra Tier.

- User-Provided Knowledge: Upload up to 10 PDFs or documents per agent

- Instruction Tuning: Define the agent’s role, scope, and behavior (e.g. “Act as a qualitative sociology research specialist”)

- Grounded Responses: Answers are anchored to the user’s own data, significantly reducing hallucinations

This turned SOM AI from a generic assistant into a research-aware collaborator.
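As a rough illustration of how those three pieces could fit together, the sketch below enforces the 10-document limit, stores the role instruction, and assembles a prompt that restricts the model to the user's own material. Everything beyond the limit itself is an assumption: the type and function names are hypothetical, and the keyword-overlap scoring is a deliberately naive placeholder for whatever retrieval the real system uses.

```typescript
// All names are hypothetical. The article only states the 10-document limit,
// the role instruction, and that answers are grounded in the user's data.
const MAX_DOCUMENTS_PER_AGENT = 10;

interface AgentDocument {
  name: string;
  text: string; // assumed to be already extracted from the PDF or document
}

interface CustomAgent {
  instruction: string;
  documents: AgentDocument[];
}

function createAgent(instruction: string, documents: AgentDocument[]): CustomAgent {
  if (documents.length > MAX_DOCUMENTS_PER_AGENT) {
    throw new Error(`An agent accepts at most ${MAX_DOCUMENTS_PER_AGENT} documents`);
  }
  return { instruction, documents };
}

// Naive relevance score: how many distinct question words (longer than three
// characters) occur in the document. A placeholder for real retrieval.
function scoreDocument(question: string, doc: AgentDocument): number {
  const words = Array.from(
    new Set(question.toLowerCase().split(/\W+/).filter((w) => w.length > 3)),
  );
  const haystack = doc.text.toLowerCase();
  return words.filter((w) => haystack.includes(w)).length;
}

function buildGroundedPrompt(agent: CustomAgent, question: string, topK = 2): string {
  const context = agent.documents
    .map((doc) => ({ doc, score: scoreDocument(question, doc) }))
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, topK)
    .map((entry) => `[${entry.doc.name}]\n${entry.doc.text}`)
    .join("\n\n");

  return [
    `System: ${agent.instruction}`,
    "Answer only from the context below. If the context is insufficient, say so.",
    `Context:\n${context || "(no matching documents)"}`,
    `Question: ${question}`,
  ].join("\n\n");
}

// Usage with two made-up documents.
const demoAgent = createAgent("Act as a qualitative sociology research specialist", [
  { name: "interviews.pdf", text: "Interview transcripts about urban migration patterns" },
  { name: "methods.pdf", text: "Grounded theory coding procedures" },
]);
const demoPrompt = buildGroundedPrompt(
  demoAgent,
  "What migration patterns appear in the interviews?",
);
```

The explicit "answer only from the context" instruction, combined with passing in only matching documents, is what keeps responses tied to the uploaded material in this sketch.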

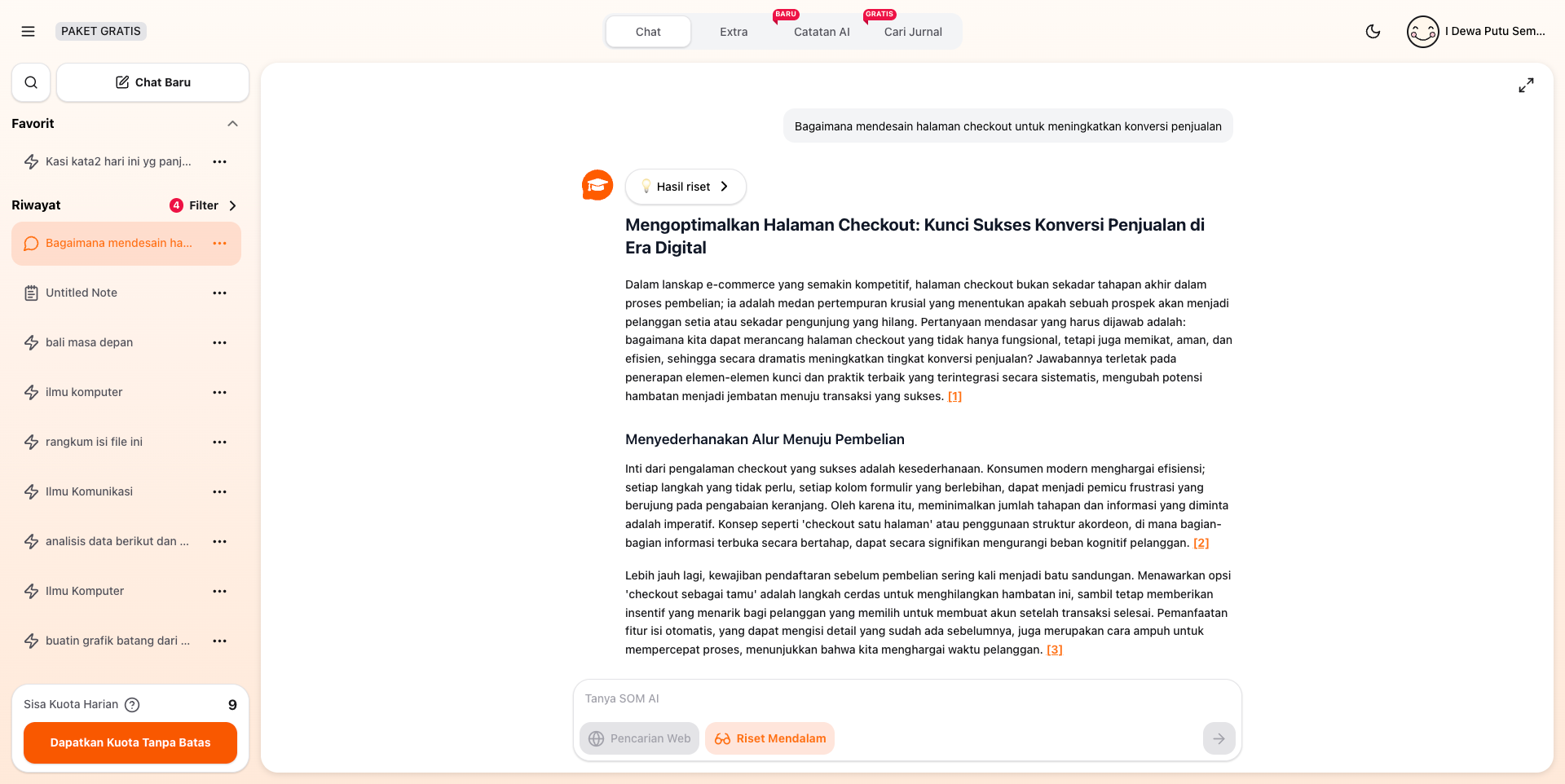

#7. Advanced Intelligence: Beyond the Knowledge Cutoff

To handle complex, up-to-date research questions, we introduced user-triggered intelligence modes:

- Web Search: Allows agents to fetch the latest journals and online sources

- Deep Research Mode: Runs a recursive agentic workflow that:

  - Breaks a query into sub-questions

  - Executes them independently

  - Synthesizes results into a structured, multi-perspective report
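The three Deep Research steps above can be sketched as a small recursive function. This shows the general decompose, execute, synthesize shape, not SOM AI's implementation. The `ResearchTools` hooks stand in for LLM or web-search calls, the `maxDepth` limit is an assumed safeguard against unbounded recursion, and `renderReport` only formats an outline where a real system would likely ask a model to write the final report.

```typescript
// Hypothetical hooks: in a real system both would call an LLM and/or web search.
interface ResearchTools {
  decompose(query: string): Promise<string[]>; // returns [] when the query is atomic
  answer(query: string): Promise<string>;
}

interface ResearchNode {
  query: string;
  answer?: string; // present on leaf nodes only
  children: ResearchNode[]; // non-empty on decomposed nodes
}

async function deepResearch(
  query: string,
  tools: ResearchTools,
  maxDepth = 2, // assumed limit to bound cost and recursion
): Promise<ResearchNode> {
  // Step 1: break the query into sub-questions (skipped once depth runs out).
  const subQuestions = maxDepth > 0 ? await tools.decompose(query) : [];
  if (subQuestions.length === 0) {
    return { query, answer: await tools.answer(query), children: [] };
  }

  // Step 2: sub-questions are independent, so they run concurrently.
  const children = await Promise.all(
    subQuestions.map((q) => deepResearch(q, tools, maxDepth - 1)),
  );
  return { query, children };
}

// Step 3 (simplified): flatten the tree into an indented outline.
function renderReport(node: ResearchNode, depth = 0): string {
  const indent = "  ".repeat(depth);
  const lines = [`${indent}- ${node.query}`];
  if (node.answer !== undefined) lines.push(`${indent}  ${node.answer}`);
  for (const child of node.children) lines.push(renderReport(child, depth + 1));
  return lines.join("\n");
}
```

Because each branch is awaited through `Promise.all`, one failing sub-question rejects the whole run. A production workflow would probably want per-branch error handling and a cap on concurrent calls.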

#8. Technology Stack Overview

To support long-term growth and reliability, the platform is built on:

- Frontend: Next.js for responsive UX and SEO-friendly pages

- Backend: NestJS with modular Clean Architecture

- Database: PostgreSQL + Prisma for type-safe modeling

- Caching: Redis for sessions and performance-critical data

- Storage: S3-compatible storage for large-scale document uploads

#9. Results & Impact (as of Dec 31, 2025)

| Metric | Outcome |
|---|---|
| Registered Users | 350,000+ |
| API Performance | ~60% faster via Redis |
| Chat History UX | Constant-speed loading |
| Monetization | Free, Basic, Extra tiers |
| AI Personality | Relatable Bahasa Gaul (Lo/Gue) tone |

#10. Reflection

Working on SOM AI 2.0 highlighted that effective AI systems are defined by orchestration: how data, context, and model calls are wired together around real user behavior.

Scalable backend architecture, Redis-backed performance layers, and agent-driven workflows allowed the platform to remain fast, reliable, and cognitively lightweight for students navigating high-pressure academic work.

“A student’s focus is fragile. My goal was to build a system fast and smart enough to stay out of their way.”